
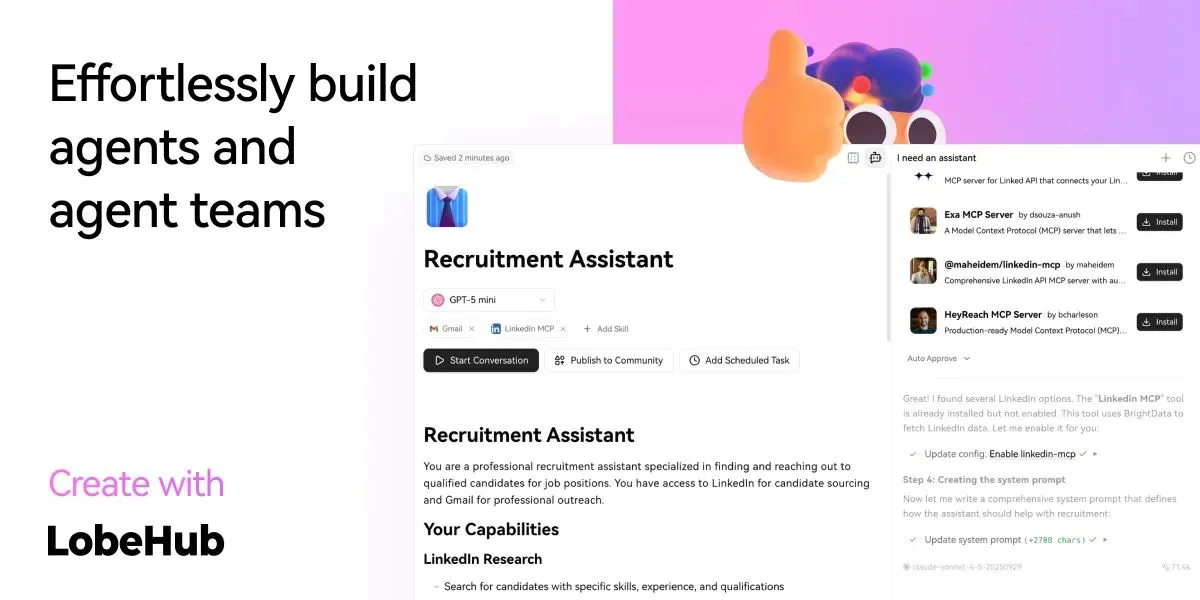

LobeHub self-hostable AI chat interface

LobeHub (specifically LobeChat) is a fully open-source, self-hostable AI chat interface designed to run local or cloud-based LLMs with strong privacy and customization.

✅ Open Source

- LobeChat is MIT-licensed

- Actively maintained

- No telemetry, no cloud dependency required

- Supports fully offline operation when paired with a local LLM runtime (e.g., Ollama or LM Studio)

🔑 Key Features

| Category | Details |
|---|---|
| LLM Support | Ollama, OpenAI-compatible APIs, Azure, Anthropic, Mistral, Groq, and local GGUF models |
| Local-First | Works 100% offline if you run Ollama/LM Studio locally |
| Multi-Model | Switch between models per chat or use them in parallel |
| Knowledge Base | Upload PDFs, docs, websites → embed into vector DB for RAG |
| Plugins & Tools | Built-in browser, code interpreter, file analysis, custom functions |
| Multi-User | Optional user accounts with role-based access (great for family or small teams) |
| Docker Ready | One-command deploy: `docker run -d -p 3210:3210 lobehub/lobe-chat` |
| Privacy | All data stays on your machine unless you explicitly connect to a remote API |
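
As a minimal sketch, the one-command deploy from the table can be extended so the container knows where to find an Ollama server on the same host. `OLLAMA_PROXY_URL` is the variable LobeChat's self-hosting documentation describes for this, but check it against the current docs before relying on it. The container name `lobe-chat`, the helper `lobe_run_cmd`, and the default URL are assumptions made for illustration. The helper only prints the command, so you can review it before anything starts.

```shell
#!/bin/sh
# Hypothetical helper: prints a docker command that starts LobeChat and
# points it at an Ollama server. Nothing is executed here.
#   $1 = host port to publish (default 3210)
#   $2 = Ollama base URL as seen from inside the container (assumed default)
lobe_run_cmd() {
  port="${1:-3210}"
  ollama_url="${2:-http://host.docker.internal:11434}"
  # --add-host maps host.docker.internal to the host gateway on Linux hosts
  printf 'docker run -d --name lobe-chat -p %s:3210 --add-host=host.docker.internal:host-gateway -e OLLAMA_PROXY_URL=%s lobehub/lobe-chat\n' \
    "$port" "$ollama_url"
}

# Review the generated command first.
lobe_run_cmd
# When it looks right, run it with: eval "$(lobe_run_cmd)"
```

For the container to reach Ollama this way, Ollama usually has to listen on more than the loopback interface (for example by setting `OLLAMA_HOST=0.0.0.0` before starting it).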

🏠 Real Homelab Use Case

You want a private ChatGPT alternative for your household:

- Install Ollama on your homelab mini PC

- Run LobeChat alongside it via Docker

- Family members access http://homelab:3210 to ask questions, summarize documents, or brainstorm ideas

- All conversations stay local, so no data leaves your network

- You can even upload your kids’ school notes or recipe PDFs for instant Q&A
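
Before sharing the address with the household, it can help to confirm that Ollama is actually answering on the mini PC. The sketch below assumes Ollama's default port 11434 and uses its `/api/tags` endpoint, which lists installed models. The helper name `ollama_ready` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if an Ollama server answers at the
# given base URL (default: Ollama's standard local address).
ollama_ready() {
  base_url="${1:-http://localhost:11434}"
  # --fail turns HTTP errors into a non-zero exit; --max-time avoids hanging
  curl --silent --fail --max-time 3 "$base_url/api/tags" > /dev/null 2>&1
}

if ollama_ready; then
  echo "Ollama is reachable"
else
  echo "Ollama is not reachable yet"
fi
```

If the check fails, LobeChat will start but won't be able to list or use any local models until Ollama is running.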

Perfect for:

- Private AI assistant

- Document summarization

- Offline coding help

- Family knowledge base

⚠️ Note

- While LobeChat can connect to OpenAI, its real value for homelabbers is running open models locally

- For full sovereignty: pair it with Ollama + Phi-3, Llama 3, or Mistral running on your hardware
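
A small sketch of fetching those models with the Ollama CLI. The tags `phi3`, `llama3`, and `mistral` are assumed to match the names in the Ollama model library at the time of writing and may change. The function `pull_models` and the `DRY_RUN` switch are hypothetical. By default it only prints what it would do.

```shell
#!/bin/sh
# Assumed model tags; check the Ollama library for current names and sizes.
MODELS="phi3 llama3 mistral"

# Prints the pull commands by default; set DRY_RUN=0 to download for real.
pull_models() {
  for model in $MODELS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ollama pull $model"
    else
      ollama pull "$model"
    fi
  done
}

pull_models
```

Once pulled, the models are stored on your own hardware and should appear in LobeChat's model list when it is connected to Ollama.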

💡 Recommendation

LobeChat is a polished, open-source frontend for local LLMs—ideal for homelab users who want a ChatGPT-like experience without the cloud. Self-host it next to Ollama, and you’ve got a private, offline AI assistant for your whole household.

✅ Sovereign ✅ Offline-capable ✅ Easy to deploy ✅ Actively maintained

Related Articles

Run a local-first environment.

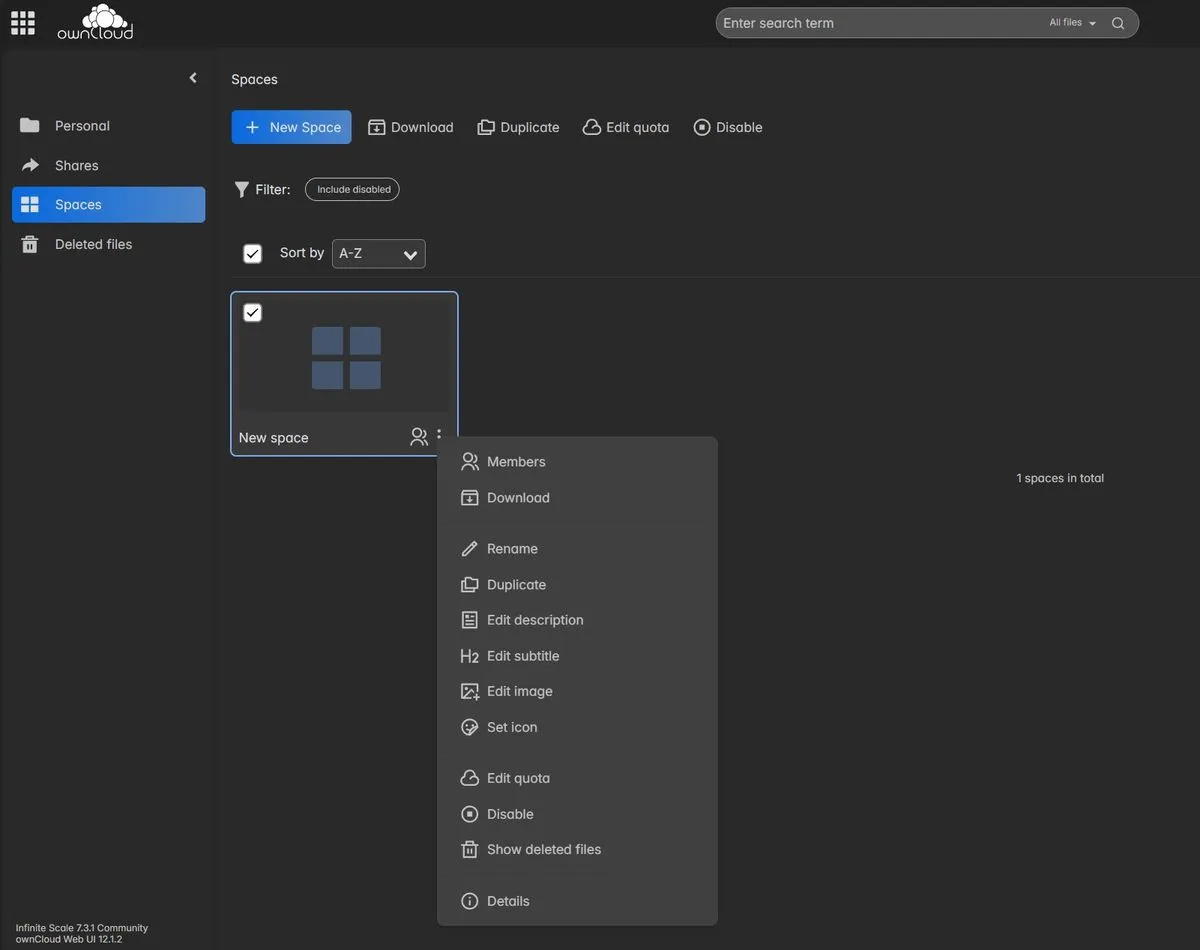

Nextcloud vs oCIS

Nextcloud vs ownCloud Infinite Scale. The Go-Based, Lightweight Alternative

Most Popular Home Media Apps

Most Popular Home Media Apps

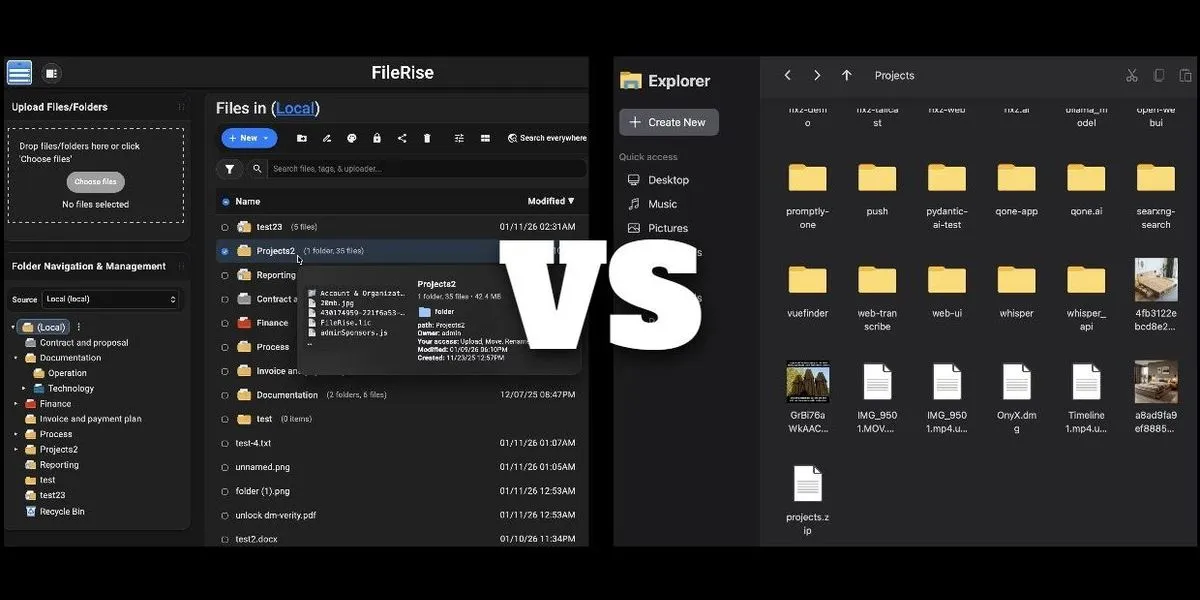

FileRise vs. nextExplorer

Compare two of the best modern, self-hosted file managers